Can you give us a bit of an intro to you and your work? What was your academic route into machine learning and how did you come to apply that foundation within the creative space?

I studied engineering at the University of Ghent, Belgium, which gave me a solid scientific foundation in mathematics, physics and programming. I discovered ML through my thesis on brain-computer interfaces, where we were training AI systems to make sense of EEG data coming from the brain to try and classify what’s called “motor imagery”. This basically allows entirely locked-in patients to communicate with the outside world using nothing but mentally imagined movement (their motor cortex is working fine, but the brain signals don’t reach the muscles). I was pretty blown away by the possibilities of AI systems and decided to pursue this path further. I haven’t looked back since: AI is my job, my hobby and my passion.

About two years ago, when GANs first started producing HD synthetic images, I decided to dive into AI-augmented creativity, as I recognized the huge potential of this emerging industry.

Can you tell us about your work on WZRD.AI? Was this your first foray into applying machine learning as a creative tool within music image making?

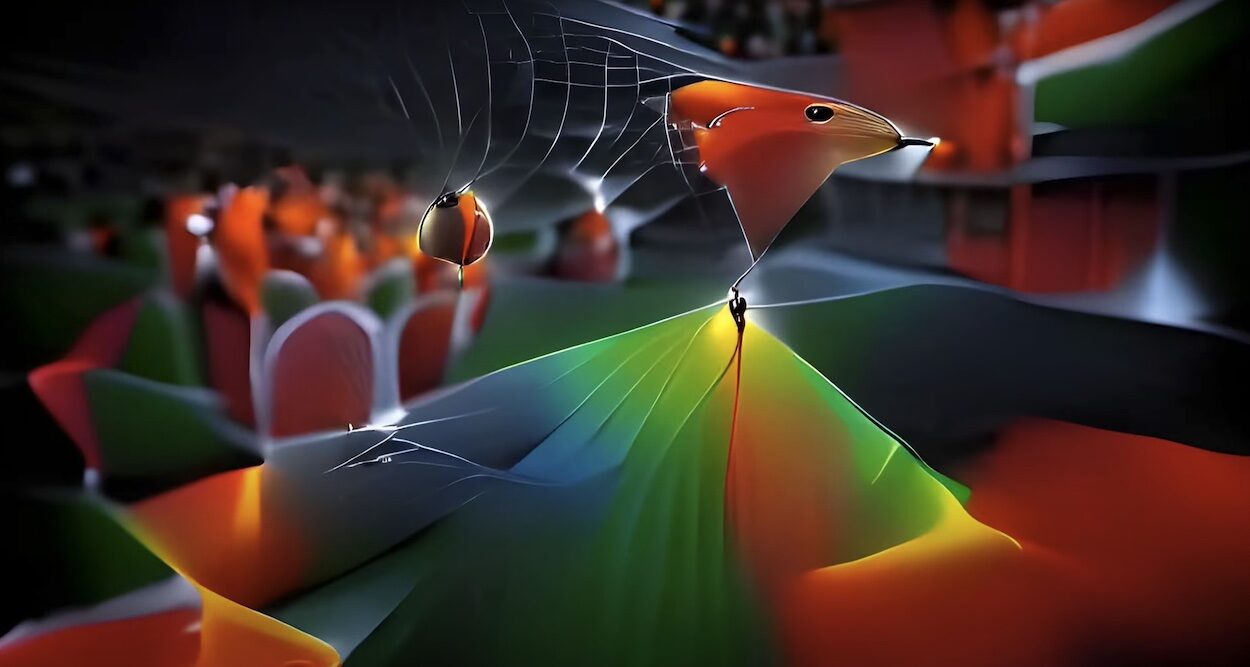

So wzrd started out as me simply experimenting with audio-driven AI-generated textures using NVIDIA’s StyleGAN model. I took an open-source generative model (StyleGAN) and built a custom framework around it that uses features extracted from audio (things like beats and melody) to make the visual textures ‘dance’ to the music. I soon realized many people would be interested in leveraging this technique to create their own audiovisual content, so I started working on turning my code into a web-based media platform, which is now wzrd.ai.
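As a rough illustration of the idea of audio features steering a generative model, here is a minimal sketch, not the wzrd.ai code. It assumes a generator that takes one latent vector per video frame (512 dimensions here, chosen for illustration). The helper names `energy_envelope` and `audio_reactive_latents` are hypothetical, and a simple per-frame loudness measure stands in for real beat and melody features.

```python
import numpy as np

def energy_envelope(audio, frame_len):
    """Root-mean-square energy per video frame, normalized to [0, 1]."""
    n_frames = len(audio) // frame_len
    frames = audio[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt((frames ** 2).mean(axis=1))
    return rms / (rms.max() + 1e-8)

def audio_reactive_latents(envelope, latent_dim=512, strength=2.0, seed=0):
    """One latent vector per frame: a slow drift plus an energy-driven push."""
    rng = np.random.default_rng(seed)
    base = rng.standard_normal(latent_dim)       # starting point in latent space
    drift_dir = rng.standard_normal(latent_dim)  # slow motion over the whole clip
    pulse_dir = rng.standard_normal(latent_dim)  # direction "kicked" by loud frames
    t = np.linspace(0.0, 1.0, len(envelope))[:, None]
    return base + t * drift_dir + strength * envelope[:, None] * pulse_dir

# Synthetic "music" (an assumption for the demo): a 220 Hz tone gated on and
# off twice per second, sampled at 22050 Hz and rendered at 30 frames/second.
sr, fps = 22050, 30
time = np.arange(sr * 2) / sr
audio = np.sin(2 * np.pi * 220 * time) * (np.sin(2 * np.pi * 2 * time) > 0)
env = energy_envelope(audio, frame_len=sr // fps)
latents = audio_reactive_latents(env)
print(latents.shape)  # (number of video frames, latent_dim)
```

Each row of `latents` would be fed to the generator to render one frame, so louder moments push the image further along `pulse_dir` and the texture appears to pulse with the sound.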

I personally wrote all the machine learning code that runs in the backend, but I called upon a good friend of mine (Arthur Cnops) to do all the auto-scaling and cloud integrations and build a fancy front-end website.

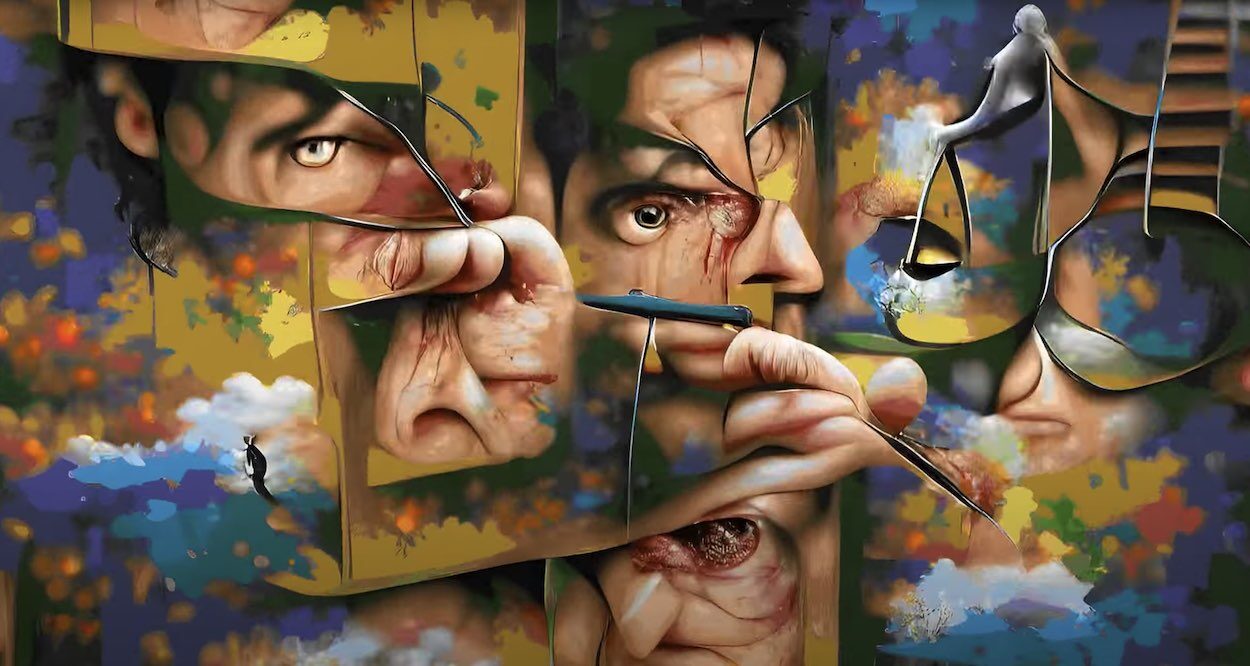

Diving into the creative process and technique behind Exotic Contents – from what little we learned about CLIP and VQGAN from YouTube, our understanding is this technique generates static images rather than full 3D environmental renders. Yet you’ve managed to create a sense of continuous movement through a 3D environment. How did you achieve this – was it the result of applying timelapsing and morphing combined with some element of Cinema 4D or similar?

So yes, by default the VQGAN+CLIP technique generates static images from text. However, there are many subtle ways to transform this pipeline so it generates video instead. My Twitter thread here explains exactly how I did this. Essentially, a second neural network is used to estimate a depth-field for the generated static images, after which all the pixels can be projected into a virtual 3D scene. Once every pixel has an (x,y,z) location you can perform any camera warp you want (e.g. zoom into the scene) and reproject the pixels for the next frame. Everything is still happening in Python, so no external explicit 3D software like Cinema 4D or Blender is even needed.
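The depth-and-reproject step described above can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the code used for the video: it assumes a simple pinhole camera with a made-up focal length, takes the depth map as given (in the real pipeline it comes from a second neural network), and the function name `warp_with_depth` is hypothetical.

```python
import numpy as np

def warp_with_depth(image, depth, focal, forward):
    """Forward-splat pixels into a camera moved `forward` units along +z."""
    h, w = depth.shape
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    v, u = np.mgrid[0:h, 0:w]
    # Unproject: pixel (u, v) with depth z becomes the 3D point (x, y, z).
    x = (u - cx) * depth / focal
    y = (v - cy) * depth / focal
    z = depth - forward  # moving the camera forward brings every point closer
    valid = z > 1e-6     # drop points that end up behind the camera
    safe_z = np.where(valid, z, 1.0)
    # Reproject each 3D point into the moved camera.
    u2 = np.round(focal * x / safe_z + cx).astype(int)
    v2 = np.round(focal * y / safe_z + cy).astype(int)
    valid &= (u2 >= 0) & (u2 < w) & (v2 >= 0) & (v2 < h)
    out = np.zeros_like(image)
    # Write far points first so nearer points overwrite them; this relies on
    # NumPy keeping the last write when target indices repeat.
    order = np.argsort(-z[valid])
    out[v2[valid][order], u2[valid][order]] = image[valid][order]
    return out

# Tiny demo: a random 5x5 RGB frame on a flat wall two units away.
rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(5, 5, 3), dtype=np.uint8)
depth = np.full((5, 5), 2.0)
zoomed = warp_with_depth(frame, depth, focal=4.0, forward=1.0)
```

Zooming in this way leaves empty (black) pixels where no source pixel lands, so a practical pipeline needs some way of filling those holes before or while generating the next frame.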

To ensure you had a sense of aesthetic continuity across the video did you have to select a discrete data set of artworks upfront to use as the raw material for the generative process? It feels like there are recurring abstract elements that evoke some of Francis Bacon’s work (Three Studies for Figures at the Base of a Crucifixion for instance) as well as some semi-religious imagery in the form of crowds that resemble enrobed groups of worshipers.

The CLIP+VQGAN approach is actually extremely flexible in terms of what it can generate. CLIP was trained on essentially a snapshot of the entire internet, so it knows A LOT. By sculpting the text prompt that you feed into the system you actually have pretty refined control over the visual esthetics the model produces. This is an emerging digital job that’s being referred to as “prompt engineering”, where creatives are leveraging their imagination in search of novel text prompts that trigger the model in just the right way to produce interesting visual output. A lot of it is trial and error, curation and refinement. But since I wrote all the code myself, I can easily go under the hood and change particular aspects of the pipeline to implement specific elements I’m after. For example, I experimented a lot with limited color palettes to create distinct visual esthetics for each scene.

I actually gained a lot of experience in “prompt engineering” when working on my NFT collection “DreamScapes” on OpenSea. I basically spent months refining the text prompts that would trigger my image generator to produce exactly the kinds of esthetics I was looking for. In a sense, prompt engineering is the modern equivalent of developing a personal, creative esthetic.

How far was the final video the result of an unmodified initial set of parameters you applied or did you make adjustments across the process to create specific effects / outputs? i.e. how much of the video is down to an element of “chance” and how far did you intervene in the CLIP / VQGAN process to “direct” the output in a more deliberate way?

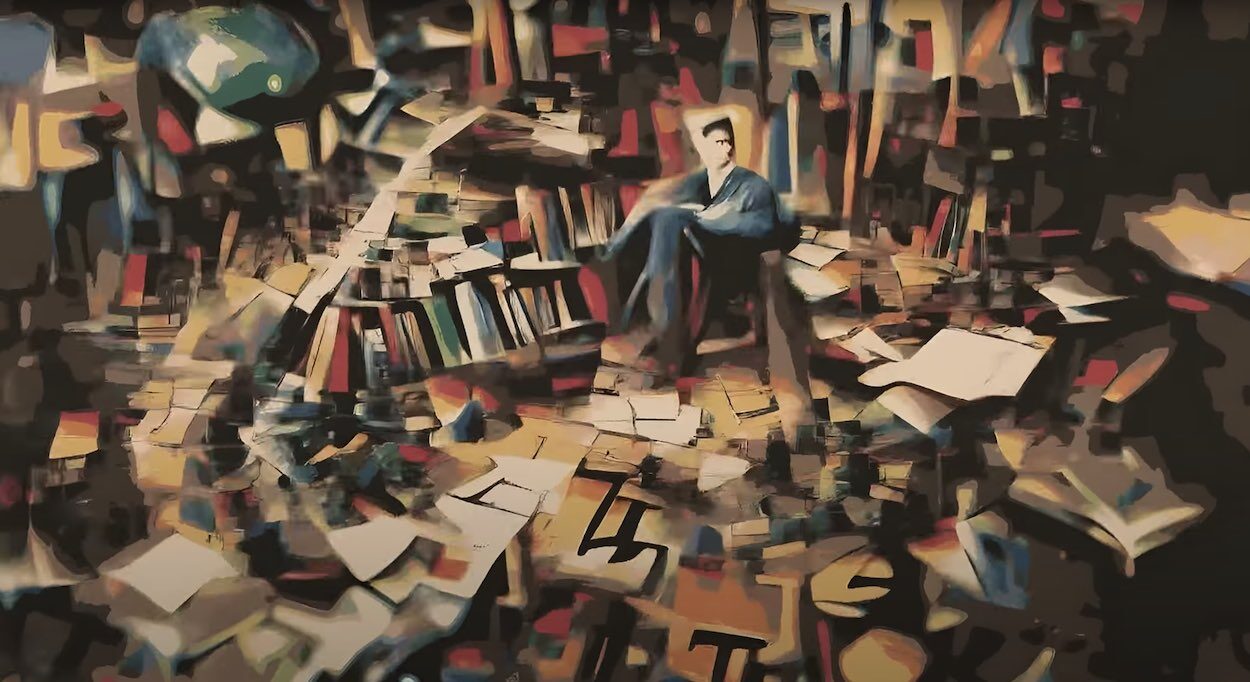

This is probably the most interesting part of this entire process: I very much feel like I did not create the video entirely by myself. The AI system was very much a collaborative element in this creative journey. Every night I would have the machine render a ton of footage. The next morning I would then go through it and often be surprised by unexpected outputs. These would then serve as the basis for new ideas (I would adjust the code to generate more of this stuff) and the cycle would repeat. So in this sense the AI is an integral part of the creative process and this felt much more like a collaboration than a purely individual creative process. I’d like to coin the term “imagination amplifiers” for this type of emerging generative AI model. These models can’t yet produce a final piece of work, but they are incredibly good at taking human intent (e.g. in the form of language) and transforming that into digital content (e.g. an image or a video snippet). The overarching narrative is still driven by our own imagination, but the technical skill required to turn that narrative into pixels is dissolving very rapidly. People using Photoshop or video editing software will have to adopt these tools or go out of business. It really is an “adapt or die” kind of situation, to put it bluntly.

Why do you think this ghost in the machine effect is so compelling? It almost feels like we’re watching an AI try to express itself creatively, like it’s trying to express something we might more easily recognise as a “soul”. And how far do you feel experiments such as this give us a greater sense of what makes us human? There’s a fascinating abstract area that emerges through this sort of creativity where there’s almost an epiphenomenal sense of consciousness or intention behind what is ultimately a determined process of machine learning.

It’s very natural for us to anthropomorphise AI systems. But I really look at them as creativity-augmenting tools (like a box of crayons) that have just become very advanced. The content that we can now create with AI feels like it almost has agency because these models are trained on data generated by humans (e.g. the internet). In that sense these models form almost a reflection of our own collective consciousness. There are also challenges to this paradigm: e.g. any biases (like racism or gender inequality) that are present in our own generated data will simply be adopted by the AI models unless we specifically engineer them against this.

Are you working on any other creative projects at the moment that you’d like to tell us about? Or working on any R&D that continues this creative application of machine learning?

The space of AI-generated media is currently moving so insanely quickly that even I have trouble following all the new stuff that’s coming out. However, I believe we’re starting to see the first important building blocks of what people are calling “The Metaverse”. These AI-generated media tools will populate a new digital space where people share totally new types of digital content (images, video and music but also entire immersive 3D worlds). I’m really excited to see how all these new possibilities for human expression and creativity pan out. At the same time, I also understand how some people actually get scared by all this digitization and automation. I guess the big challenge is to create a future where these tools have the most positive impact they can have while also minimizing the risks they pose to our human sense of belonging. Technology, I believe, is always going to be a double-edged sword.

Interview by Stephen Whelan

wzrd.ai website

Max Cooper: Exotic Contents

Music by Max Cooper

Video by Xander Steenbrugge

Record Label: Mesh

Mesh Films